Machine Learning for Real Life: Clustering to Save Time

Machine learning can seem like something reserved for large companies and cutting-edge AI projects, but I recently used it in a simple, practical way that saved me hours of manual effort. This situation came about organically (I promise!). When I was first learning about machine learning algorithms, I would have appreciated more realistic and approachable use cases, which is what I hope to show here. I want to share how I applied clustering, an often-overlooked technique, to solve a real-world problem.

As someone who’s interested in AI/ML, I’ve seen countless examples of how large companies use machine learning to optimize supply chains, improve recommendations, or detect fraud. Those examples are impressive, but I also want to understand how these techniques can be useful in my day-to-day work.

The Task

I had a large number of documents to organize for another team to ultimately deliver insights. There were a few complications to this task that made it less “clean”:

- I had little contextual information about the documents.

- There was no metadata (no authors, no labels, nothing).

- I only had the raw content of the documents.

- I needed a way to make sense of them so I could ask more informed questions of the team that had the expertise.

Manually reading and sorting these documents was not going to be possible in the timeframe I had, so I turned to machine learning.

A Quick Primer on Clustering

I ended up using unsupervised learning for this task, which is an area of ML that I believe deserves much more attention. In unsupervised learning, instead of training a model with labeled examples, the algorithm identifies patterns on its own.

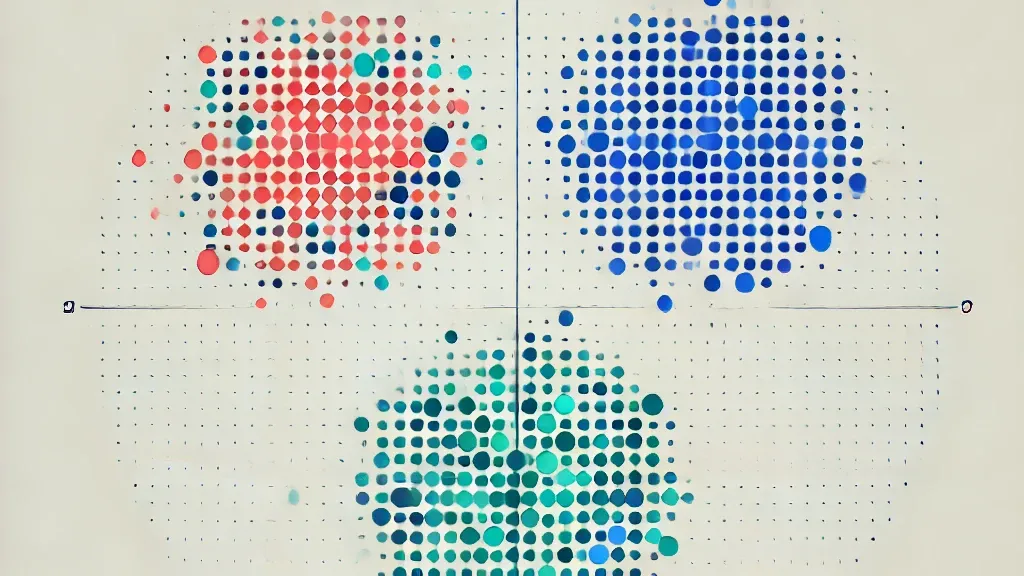

Clustering is a powerful technique in this category. As the name suggests, it groups similar data points together. This is useful because, instead of manually labeling or sorting data, you let the algorithm surface structure in the data for you.
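To make "groups similar data points together" concrete, here is a minimal sketch on made-up 2D points rather than documents. The data, the variable names, and the choice of two clusters are all assumptions for illustration; the point is only that the algorithm is never told which point belongs where.

```python
import numpy as np
from sklearn.cluster import KMeans

# Two made-up blobs of 2D points (illustrative data, not from the project).
# No labels are passed to the algorithm.
rng = np.random.default_rng(0)
points = np.vstack([
    rng.normal(loc=(0.0, 0.0), scale=0.3, size=(20, 2)),
    rng.normal(loc=(5.0, 5.0), scale=0.3, size=(20, 2)),
])

# Ask for two groups; K-means assigns each point to its nearest cluster center.
model = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = model.fit_predict(points)

# One cluster id per point; points from the same blob should share an id.
print(labels)
```

The cluster ids themselves are arbitrary. What matters is which points end up sharing one.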

How I Used Clustering to Solve My Problem

I used clustering to categorize my documents into meaningful groups, which allowed me to approach subject matter experts with organized questions rather than a chaotic pile of text.

- Vectorizing the documents – Since machine learning models don’t understand raw text, I first converted each document into a numerical format that reflects its content by weighting the words that make it distinctive. It took me a single line of code (for those interested, I used scikit-learn’s TfidfVectorizer).

- Applying clustering algorithms – I tested a few approaches and found that a combination of DBSCAN (a clustering algorithm focused on finding dense regions of data and expanding outward) and K-means (which partitions the data into a fixed number of clusters around center points) worked best for grouping my documents into meaningful categories. It had been a minute since I studied these algorithms in depth, so I will caveat that my choices were not very scientific. I was looking for a quick grouping that looked meaningful to the eye, so I was not as concerned about using the “best” approach for this particular dataset.

- Tuning parameters – Like any ML approach, clustering is not perfect out of the box. I adjusted hyperparameters (settings that influence how the model groups data) to improve the results.

Big Picture

Situations like this one, where we are faced with a deluge of information, are the reason the profession of data science now exists.

I didn’t need a massive dataset or a deep learning model—just the right approach to get more clarity on my problem. Instead of blindly asking for help, I could say, “These documents seem to fall into five main categories—does that align with what you’d expect?” That small shift made a huge difference.